Toolbox for System Designers

System design is one of the critical processes in building a reliable and sustainable software solution. That is why top-notch companies invest in their people who can design robust systems that could meet complex requirements. Most engineers struggle in getting this done right. There are various aspects that an experienced engineer should be aware of to take an informed approach to system designing. There are many moving parts, concepts, components, patterns, and details that should be considered to make a data-driven and informed decision.

Note: The topics discussed here would be more aligned with designing “web-based applications.” These may or may not apply to other software design like desktop applications. Feel free to comment below about any particular topic you think is “must include” in this article.

This article is an attempt to touch just the tip of the iceberg. My advice would be to read through the entire article before diving into details of a specific topic. Bookmark this article and revisit for future references and updates. Please share this with your colleagues so that you can collectively build robust systems. Comment below with your ideas to improve this article.

What are the prerequisites?

Knowledge

Technology startups, complex advanced technologies, and improved software development processes keep springing up faster than ever. It has becoming more difficult to cope up with the changes in the technology landscape. Knowledge is the awareness or familiarity gained by experience or learning of such advancements. Learning does not stop after college or getting a job. Knowledge about new technologies can be acquired by

- Subscribing to tech magazines

- Reading online articles

- Taking online or classroom courses

- Doing certification

Communication Skills

Communication becomes a critical skill for good system designers. Discussing new design concepts, challenges and frameworks with friends, peers, colleagues, and customers is a great way to improve awareness. Conferences, subject matter experts, and training can help in covering for the experience and knowledge gaps. System designers should be able to effectively articulate their thoughts and ideas as well as understand the requirement of the customers.

Things to be aware of during System Design

There are several concepts a system designer must be aware of to help in designing a software system in today’s technology landscape. An engineer doesn’t need to be ‘an expert’ in all of such concepts. Essential awareness and a good understanding of the core concepts should be enough to make the right design decisions.

Software Architectures

There are various ways to write, organize, and deploy software components. The most popular software architecture classifications are Monolithic, Modular, Service Oriented, Microservices, and Serverless. All these architectures differ mostly in the cohesive and coupling characteristics of different parts of the software.

- Monolithic Architecture is a single mammoth component that is designed to be self-contained and fully functional as an individual and end-to-end software.

- Modular Architecture is almost similar to monolithic architecture, but the code is well-organized as distinctively separate modules within the same software component. This is achieved through proper design using namespaces and packaging structures in the code.

- Service-Oriented Architecture is having distinct and separate software components servicing each other over the network through some communication protocols like SOAP, RPC, RMI, REST, etc.

- Microservice Architecture is an evolved version of a Service Oriented Architecture in which many small components have a single responsibility each. It is also possible that each of these microservices can have it’s very own ecosystem to exist independently of other components.

- Serverless Architecture is an exciting concept that has been picking up. It does not require to manage any server or cloud. BaaS (Backend as a Service) and FaaS (Function as a Service) are available that can be utilized to perform common computations.

Choosing the right architecture depends on factors like scale, size, level of decoupling, deployment strategy, technical skillset, etc.

Load Balancers

Load balancers are components in a deployment that would help balance the load on the application. It will help in making an application scale and improves its availability and reliability. Various load balancing strategies exist that help in making architectural decisions or troubleshooting bottlenecks. The most common strategies are Round Robin, Least Connections, Weighted, IP Hash.

Load balancers can also be configured to manage the sessions differently. We could implement a sticky session or a non-sticky / shared session strategy.

Sometimes load balancers can also be used as a proxy to an upstream server which is in a private cloud and cannot be directly accessible from the internet. This helps in providing a layer of security by masking it from unwanted traffic over a public network.

Load balancers can also either be hardware-based, cloud-based or software-based. The F5 load balancer is a hardware-based load balancer. AWS, Google Cloud and Cloudflare provide cloud-based load balancing. Nginx, Apache Server, HAProxy are all examples of software-based load balancers.

CAP and PACELC Theorem

CAP and PACELC theorems explain the trade-offs that a system designer will have to take while choosing an appropriate networked shared-data.

CAP theorem states that networked shared-data systems can only guarantee/strongly support two of the following three properties at a time:

- Consistency: A guarantee that every node in a distributed cluster returns the same, most recent, successful write. Consistency refers to every client having the same view of the data. There are various types of consistency models. Consistency in CAP (used to prove the theorem) refers to linearizability or sequential consistency, a powerful form of consistency.

- Availability: Every non-failing node returns a response for all read and writes requests in a reasonable amount of time. To be available, every node on (either side of a network partition) must be able to respond in a reasonable amount of time.

- Partition Tolerant: The system continues to function and upholds its consistency guarantees despite network partitions. Network partitions are a fact of life. Distributed systems guaranteeing partition tolerance can gracefully recover from partition failures.

PACELC Theorem is an extension of the CAP Theorem by introducing the absence of partitions and latency into the mix.

The properties considered in this theorem are:

- P – Partitions

- A – Availability

- C – Consistency

- L – Latency

In PACELC, the board is split across having partitions versus the absence of partitions. When there are Partitions (P), then the trade-off battle is between Availability (A) and Consistency (C). When there are no Partitions (E), the trade-off battle will be between Latency (L) and Consistency (C).

ACID and BASE Database Properties

ACID and BASE are two types of database principles adopted by almost all database storage systems. They are a collection of concepts that are used for maintaining consistency of data in the database.

- ACID Properties stands for Atomic, Consistent, Isolated and Durable. ACID properties imply that once a transaction is complete, the data is consistent and stable. ACID is popular among the RDBMS systems.

- BASE Properties stands for Basic Availability, Soft-state, and Eventual Consistency. BASE properties imply a softer approach towards consistency to embrace scale and resilience. The BASE properties are popular among the NoSQL and Document-based data stores.

Caching Techniques

Caching techniques are used to reduce the latency in a read-intensive system. A cache is some storage that is closer or faster to the source that is trying to read that information. The core concepts of caching techniques are:

- Loading the data – Focuses on how the cache loads the data. The data could be

- Loaded upfront during initialization.

- On-demand when some information is required.

- Synchronization – Focuses on an acceptable level of freshness of the data. The idea here is to decide when a record should expire and reloaded. Various expiry strategies to apply here are:

- Write-through: Overwrite the cached data when a write event occurs.

- Time-Based: Refresh the data after a specific period when it expires.

- Active Expiry: Reload the data whenever it is modified at the source.

- Eviction Policy: Focuses on maintaining an acceptable number of records in the cache. Loading too much data may eventually start adversely affecting the system by hogging RAM and other resources. Different eviction strategies applied are:

- Time-Based: The data will automatically be evicted after a specific time. This would help to maintain a decent size of the cache.

- FIFO (First-In-First-Out): The data loaded first would get evicted first.

- FILO (First-In Last-Out): The data loaded last would get evicted first.

- LRU (Least Recently Used): The data that list least accessed would get evicted first.

- Random: Data would get evicted at random.

- Deployment Strategy: Focuses on how the cache is deployed in the environment. The various strategies are:

- Single Instance Cache: Where there is only a single instance of cache available.

- Clustered Cache: Several replicas of the cache is maintained in a cluster.

- Distributed Cache – Where there is a central cache repository with multiple remote caches to service requests from the remote clients without increased latency.

Message Queues

Message Queues or Message Brokers are intermediary components that act as a channel between the producer and consumer services. The message queues are significant in situations where the producer sends a volley of messages as short bursts, and the consumer takes a comparatively longer time to process these messages. Having a queue that stands between the producer and consumer will help in not losing that information due to a large volume of messages sent to the consumer. In an architecture where the producer needs to send the information directly to the consumer, the producer may have to wait or drop the message before it can get a slow processing consumer to be available.

Cloud and Virtualization

Cloud service is a pool of resources, typically a collection of servers, that are connected and managed over a network. It enables the users to step-up and step-down on their resource needs as per demand and volume of traffic without worrying about running out of them.

Virtualization is using software on top of hardware to create multiple containers or virtual servers. These containers basically will be sharing the underlying hardware that includes the RAM, CPU, Disk, Network Bandwidth, etc. among each other. Virtualization is the foundation on which clouds are built.

There are too many aspects involved in Cloud and Virtualization, and I am trying to list some of them down here:

- Service Models

- IaaS – Infrastructure as a Service

- PaaS – Platform as a Service

- SaaS – Software as a Service

- BaaS – Backend as a Service

- Deployment Models

- Private Cloud

- Public Cloud

- Hybrid Cloud

- File Systems

- Network File System

- Network Attached Storage

- Storage Area Network

- Content Addressed Storage

- Cloud Storage

- Abstraction Types

- Hypervisors

- Containers

Service Discovery

Service Discovery is to automatically detect the service without the need to specify the hard-coded IP or hostname of a service. It becomes instrumental in a micro-services architecture when there too many services to handle, and every service is interacting with one or the other services. It would be chaos to change the destination IP/Port or in-case of service downtime. These micro-services uses service registries and a service discovery protocol (SDP) to accomplish this.

Technology Landscapes

There are a lot of tools and software already built, and using them would save time in re-inventing them. Having an idea of the domain and being aware of popular technology stack would help in making better decisions while designing the software system. Here are a few popular and trending ones.

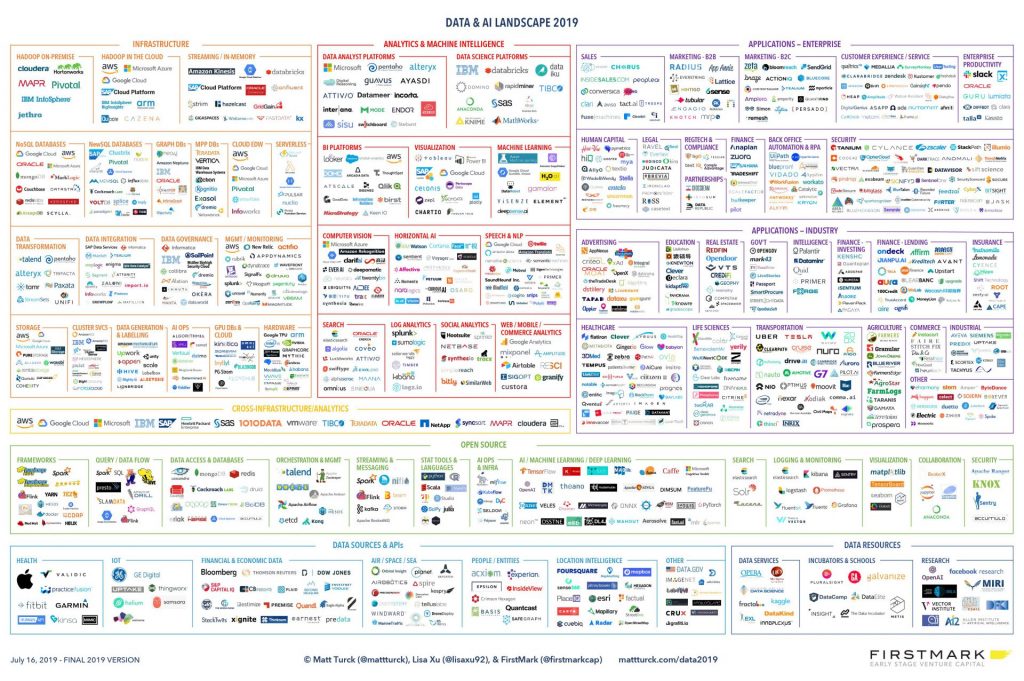

The Data & AI Landscape

Mart Turck’s article on The 2019 Data & AI Landscape has more information on what’s happening and the latest trends in Big Data and AI.

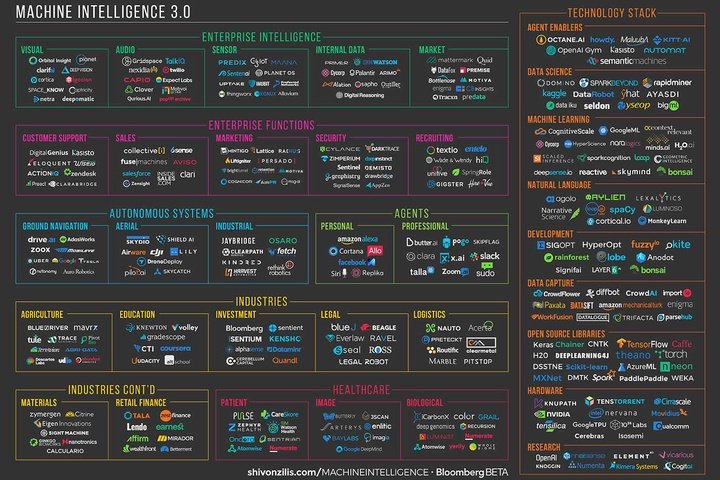

Machine Intelligence Landscape

OReilly and Shivon Zilis talks about Machine Learning 3.0 and the latest trends in Machine Learning.

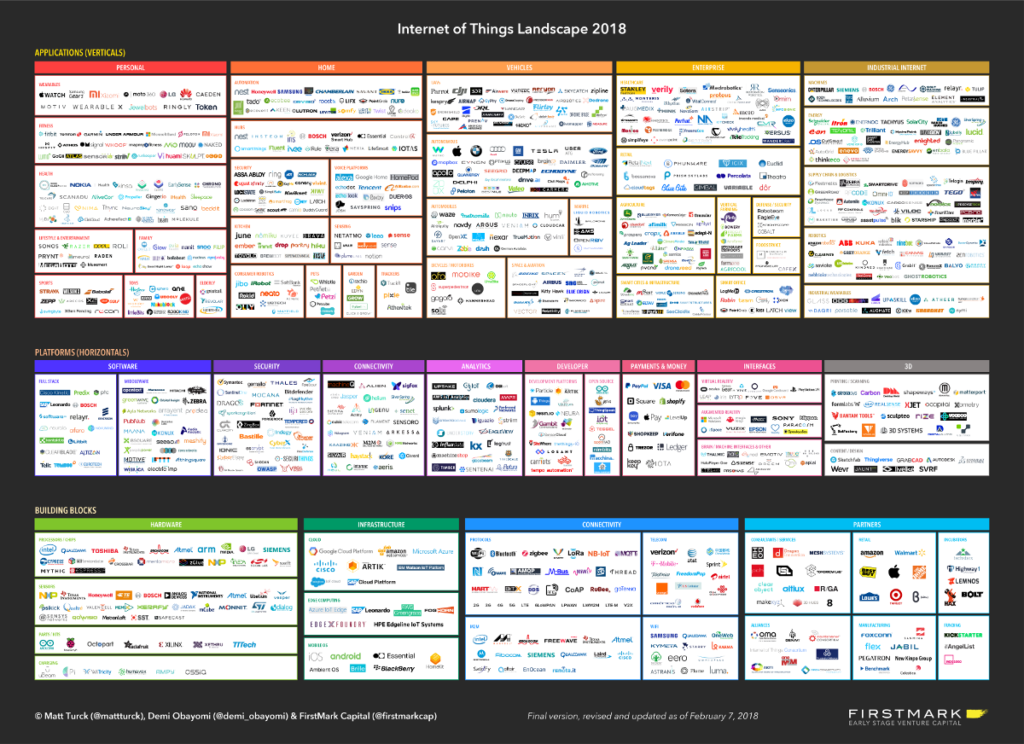

IoT Technology Landscape

Mart Turck’s article on IoT 2018 Landscape has more information on what’s happening and the latest trends in IoT.

And More…

Do not restrict to just the broad topics listed in this article. If you come across anything else that may be useful; Read about it, Learn it and do share it with us here in the comments below. We would like to know more about such interesting concepts that can be added to this list.